Diffusion models trained on large datasets capture expressive image priors which have been used for generating images, predicting geometry (depth, surface normals) from images, and for inverse problems like inpainting. However, these tasks typically require a separate model, eg. one each for generating images, depth prediction, and surface normal prediction. In this paper, we propose a novel image diffusion prior that jointly encodes appearance and geometry.

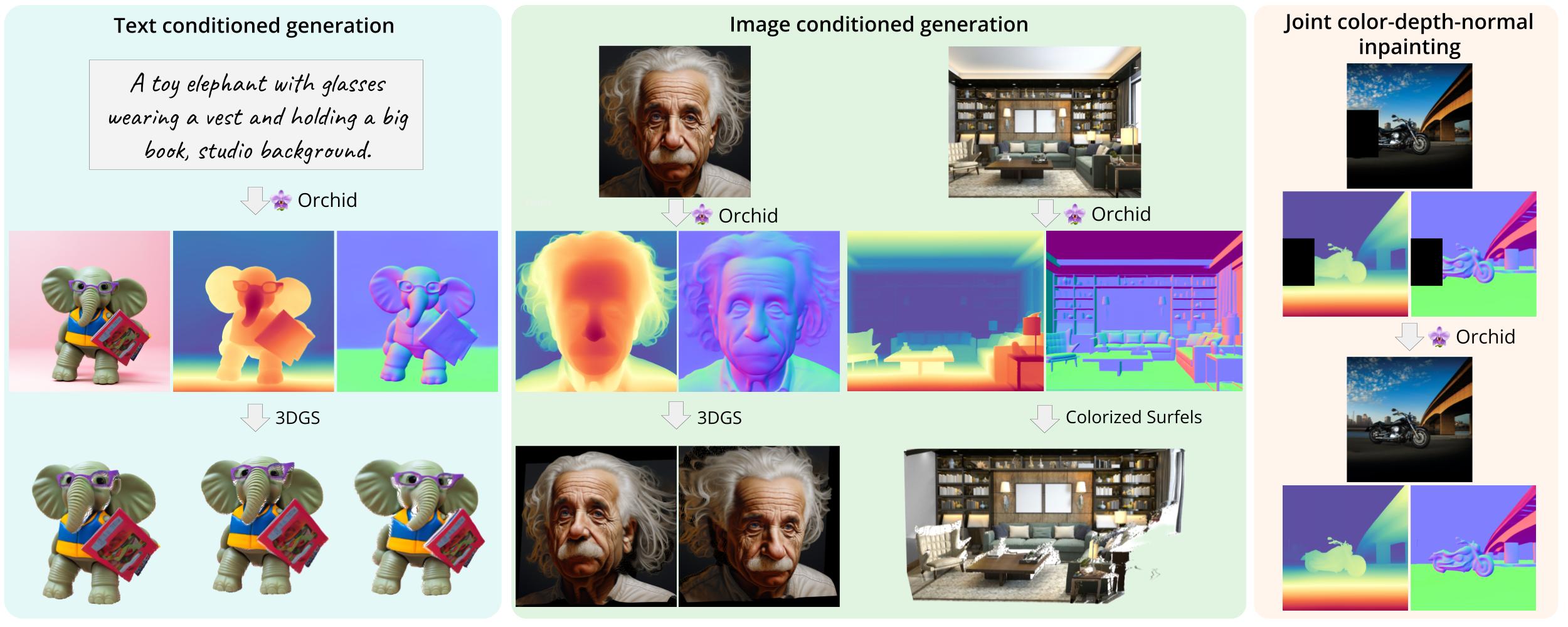

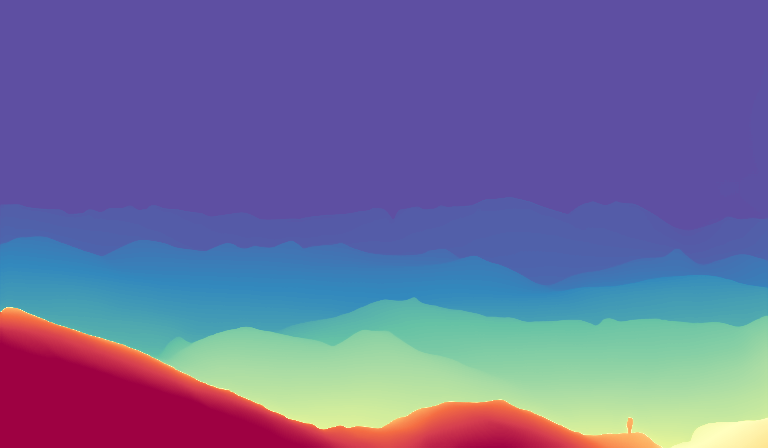

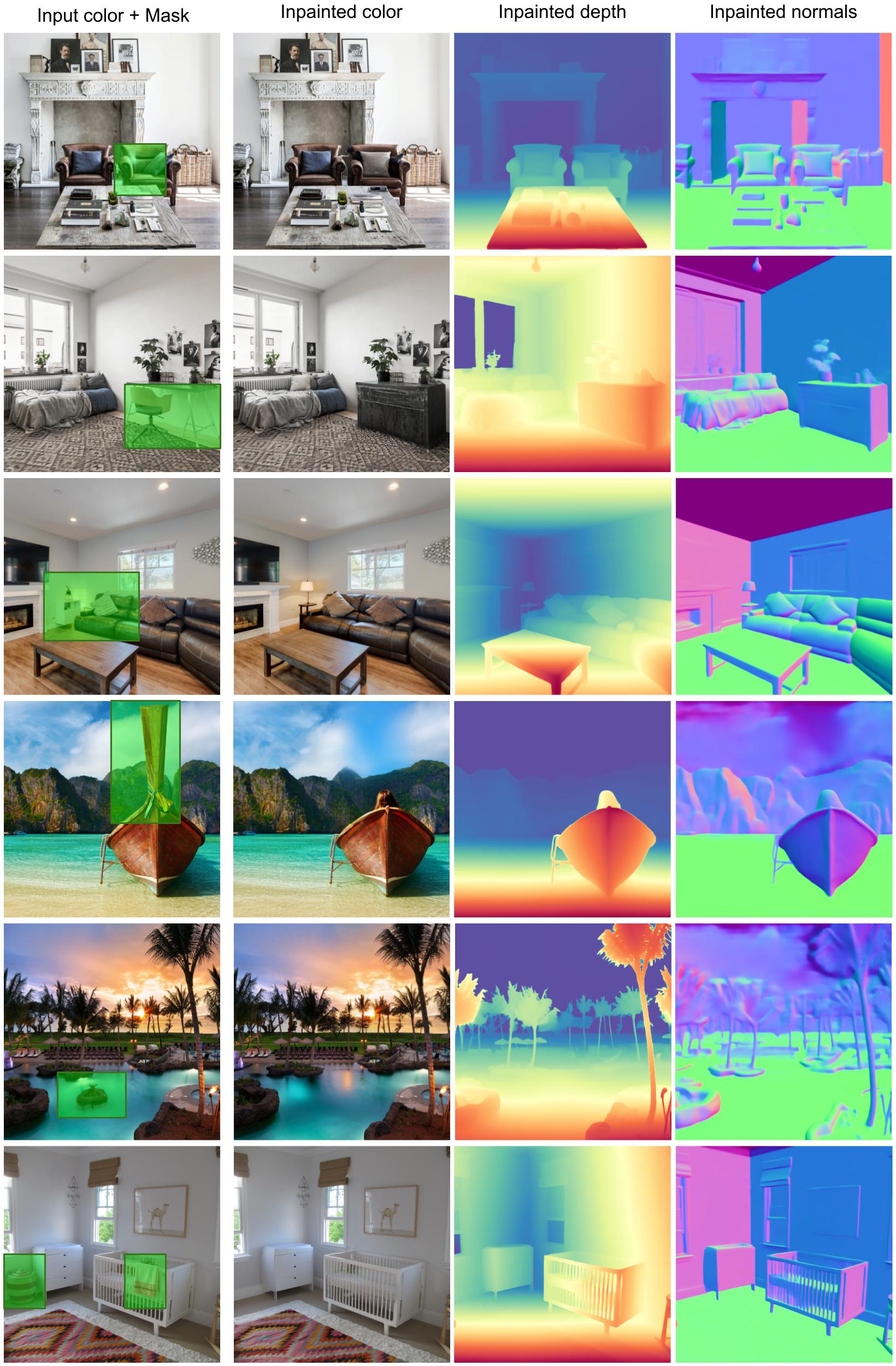

We introduce a diffusion model Orchid, comprising a Variational Autoencoder (VAE) to encode color, depth, and surface normals to a latent space, and a Latent Diffusion Model (LDM) for generating these joint latents. Orchid directly generates color, relative depth, and surface normals from text, and can be used to seamlessly create image aligned partial 3D scenes. It can also perform image-conditioned tasks like monocular depth and normal prediction jointly and is competitive in accuracy to state-of-the-art methods designed for those tasks alone. Since our model learns a joint prior, it can be used zero-shot as a regularizer for many inverse problems that entangle appearance and geometry. For example, we demonstrate its effectiveness for joint color-depth-normal inpainting, showcasing its applicability as a prior for sparse view 3D generation.

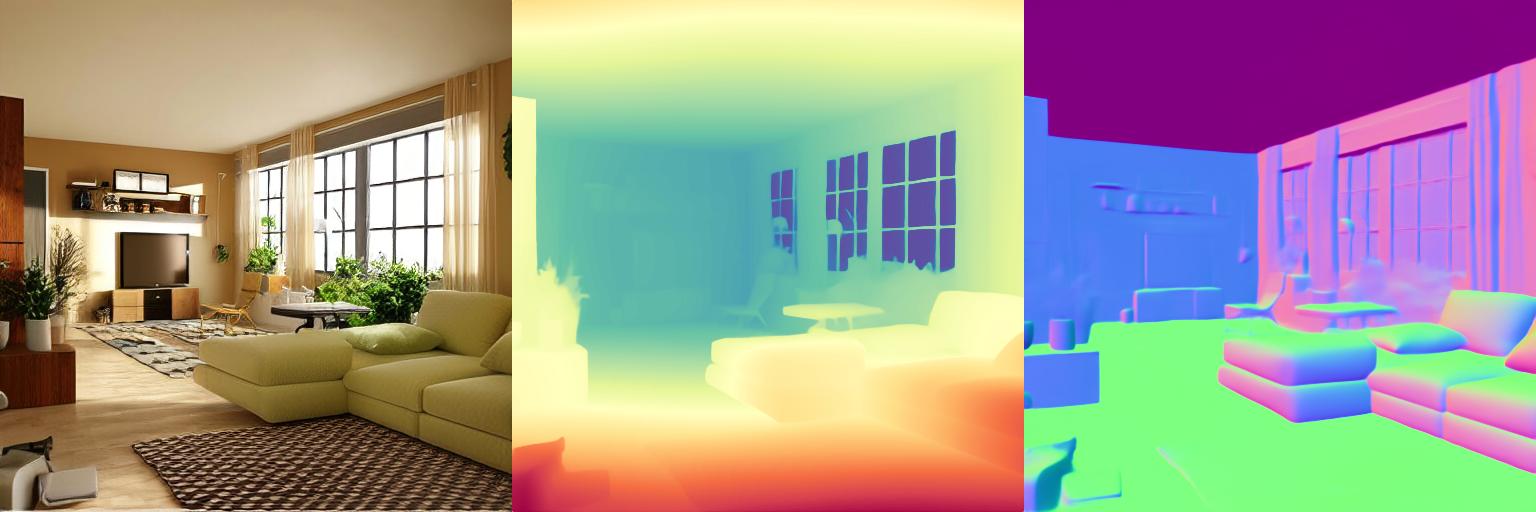

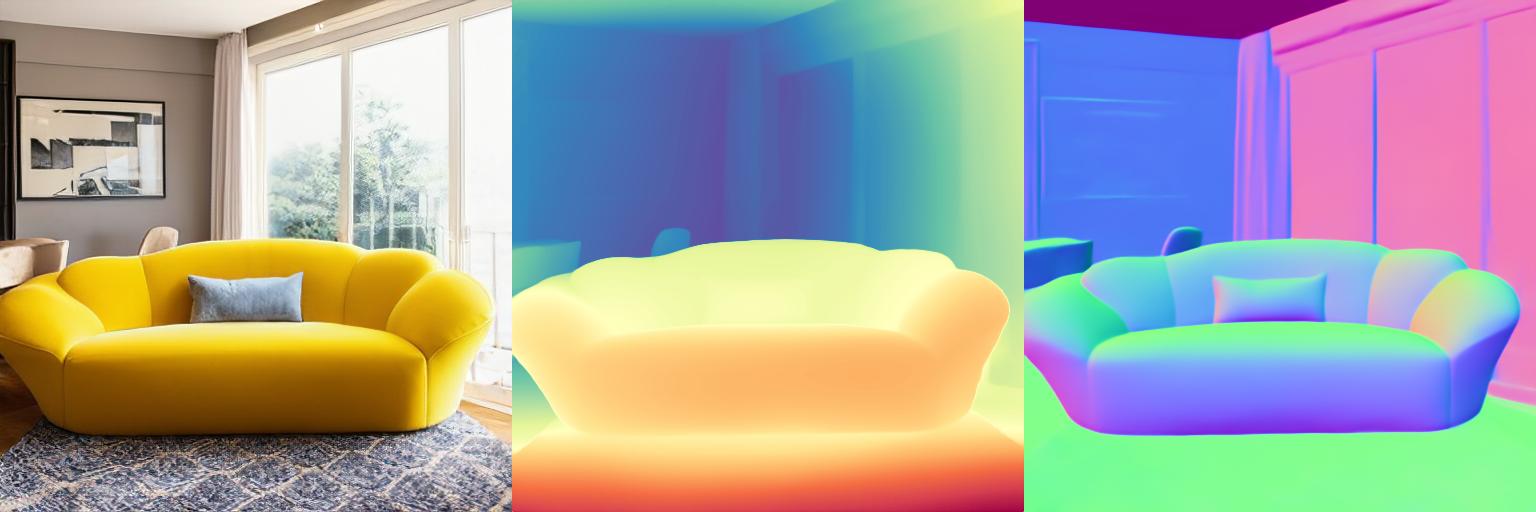

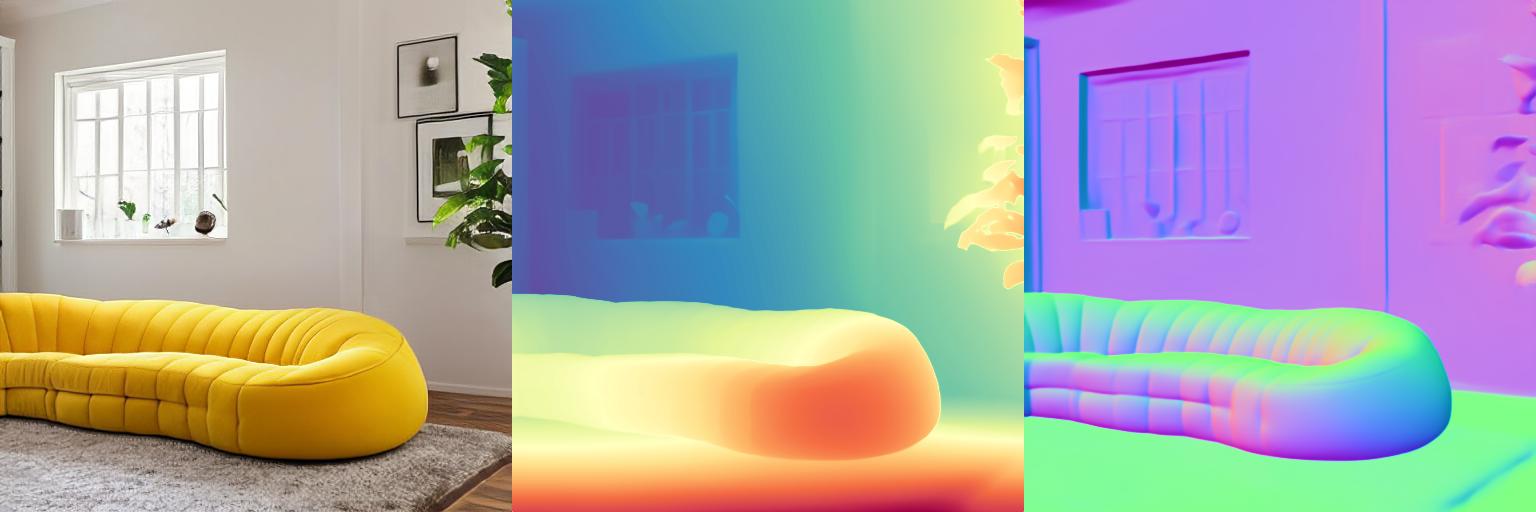

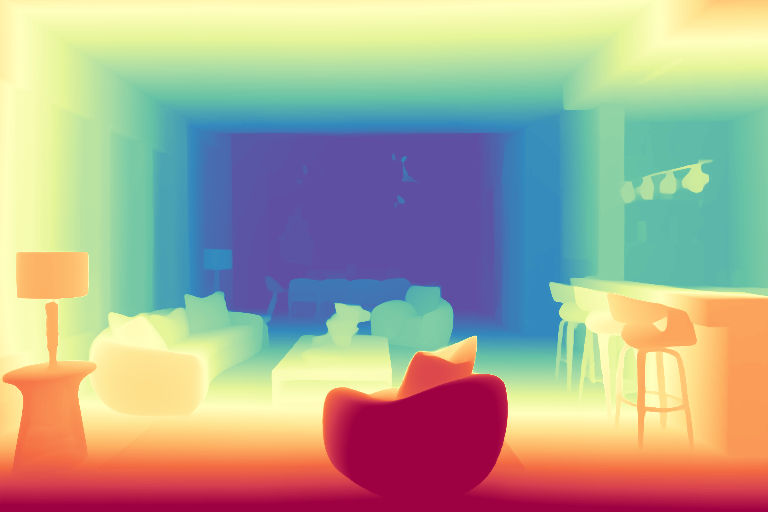

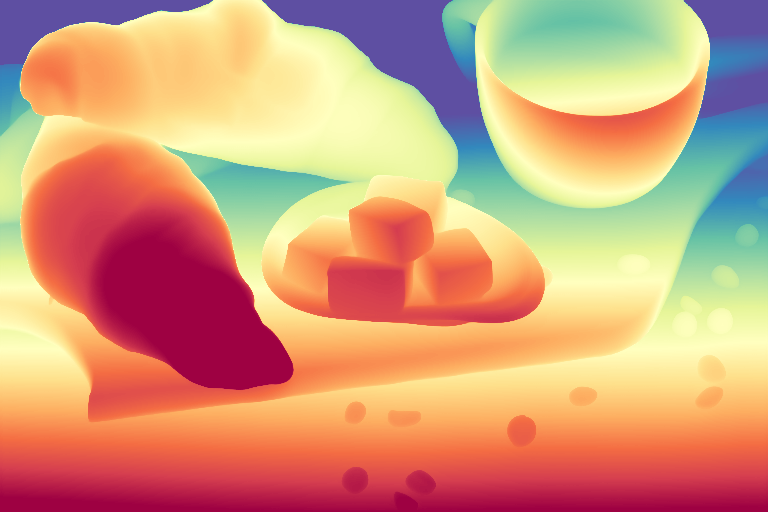

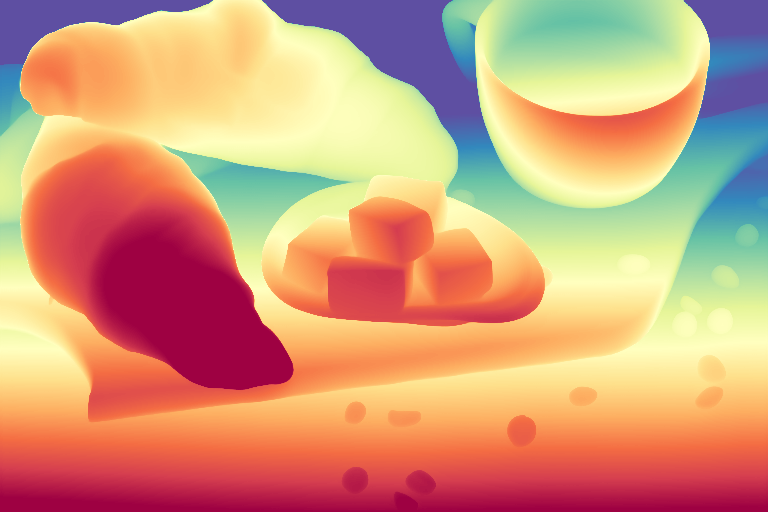

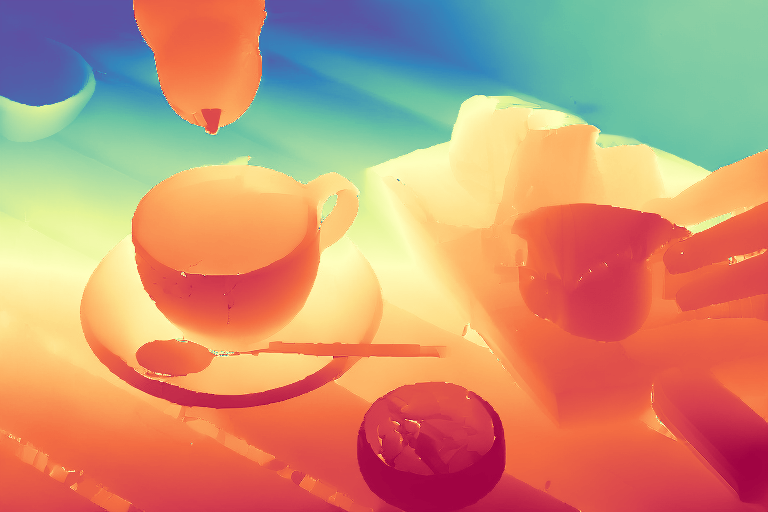

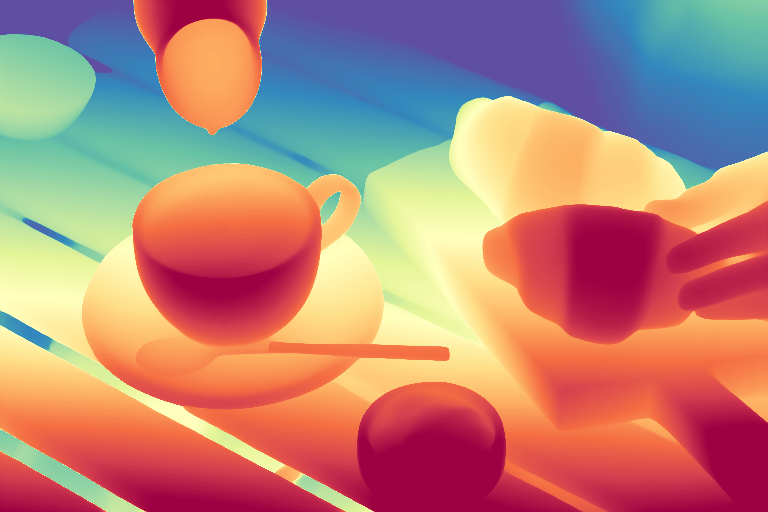

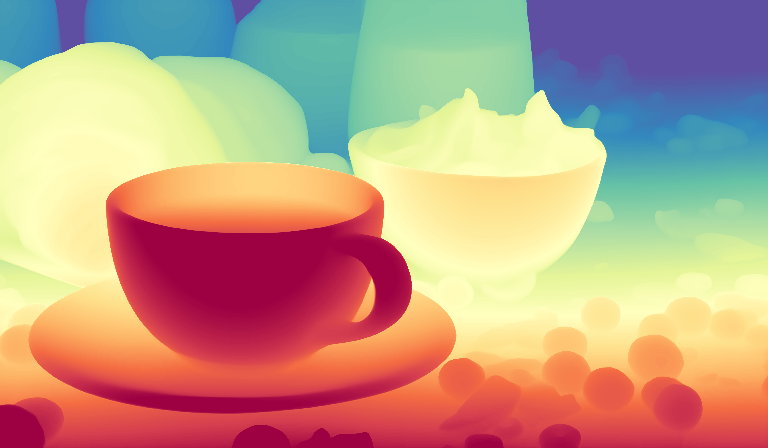

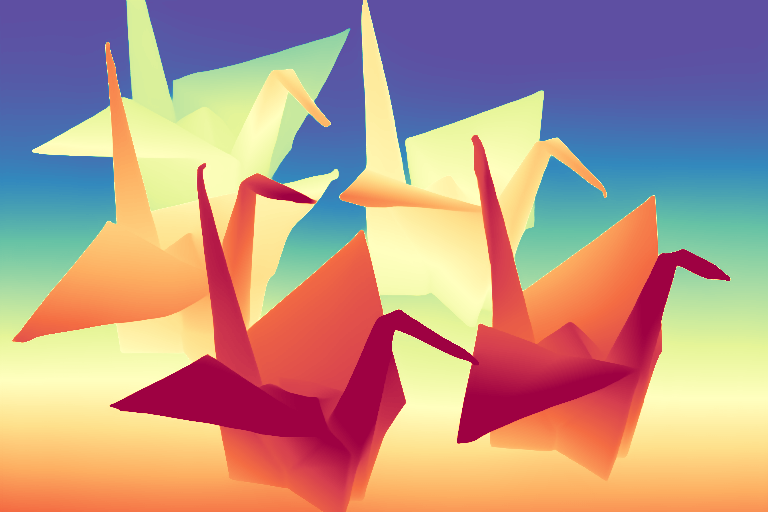

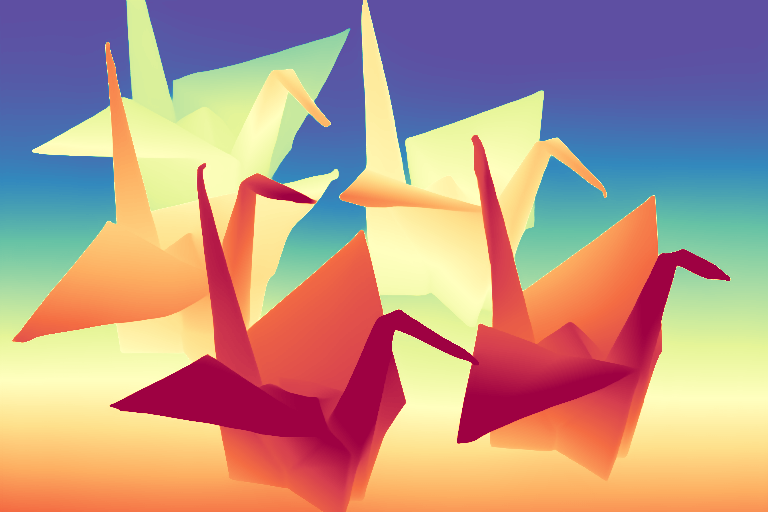

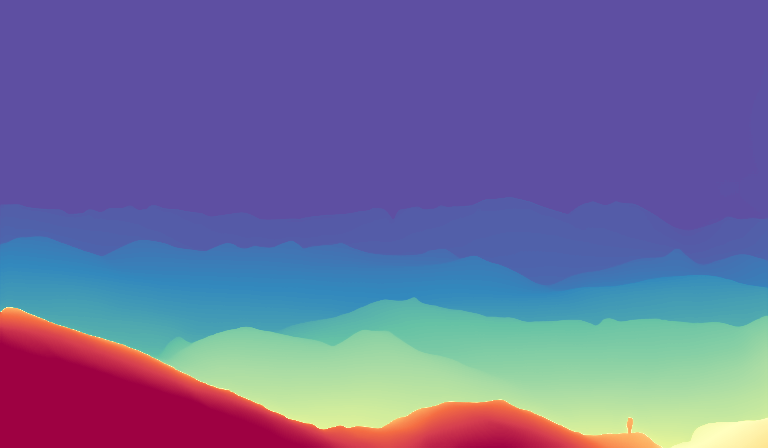

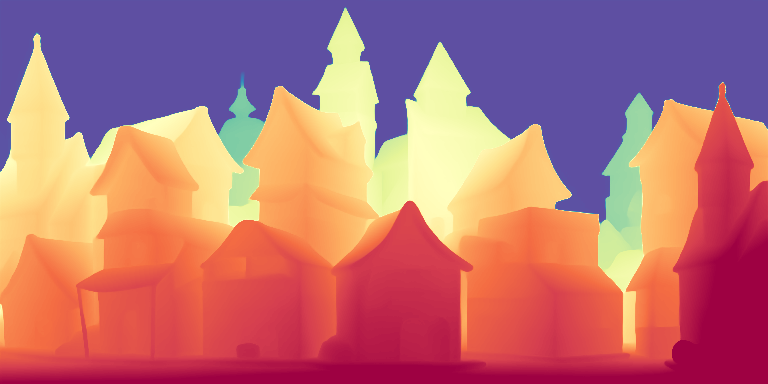

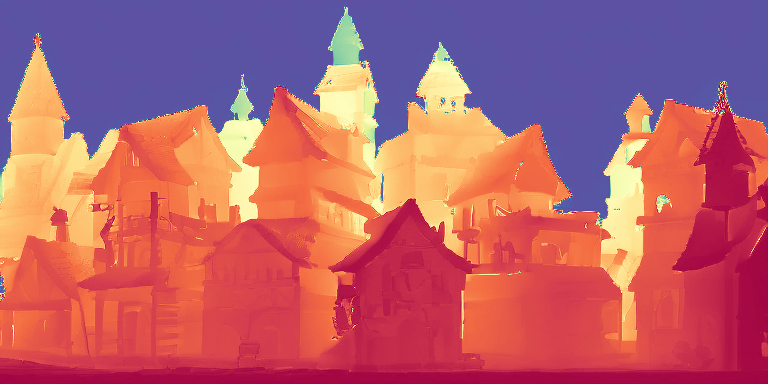

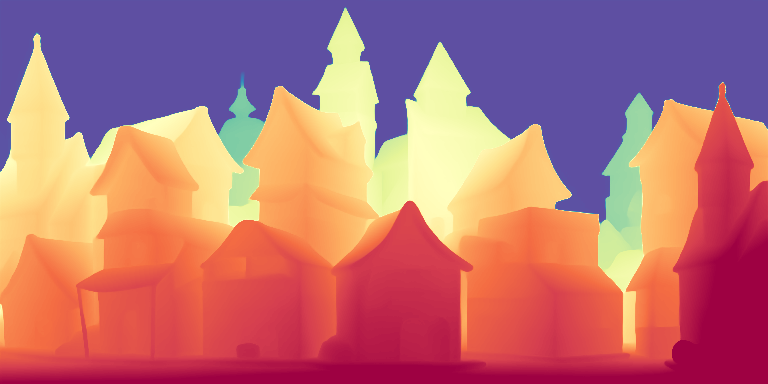

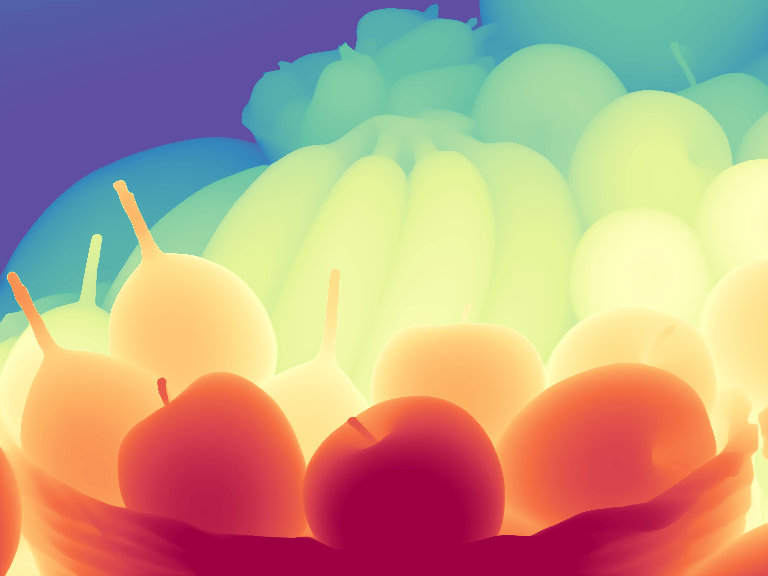

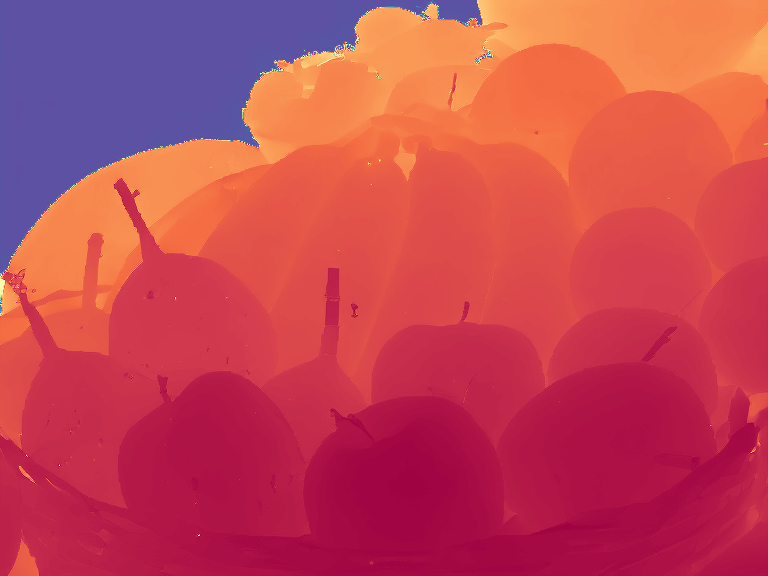

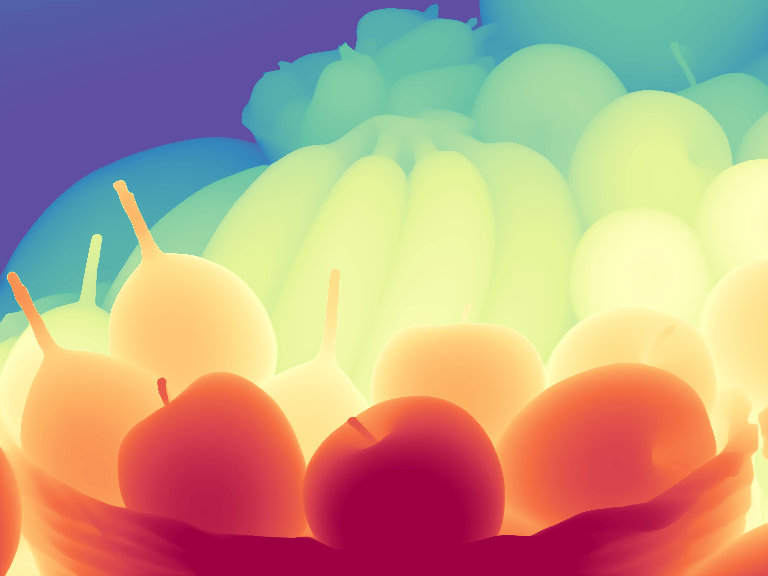

Orchid can jointly generate color, depth and surface normal images from a text prompt in a single diffusion process.

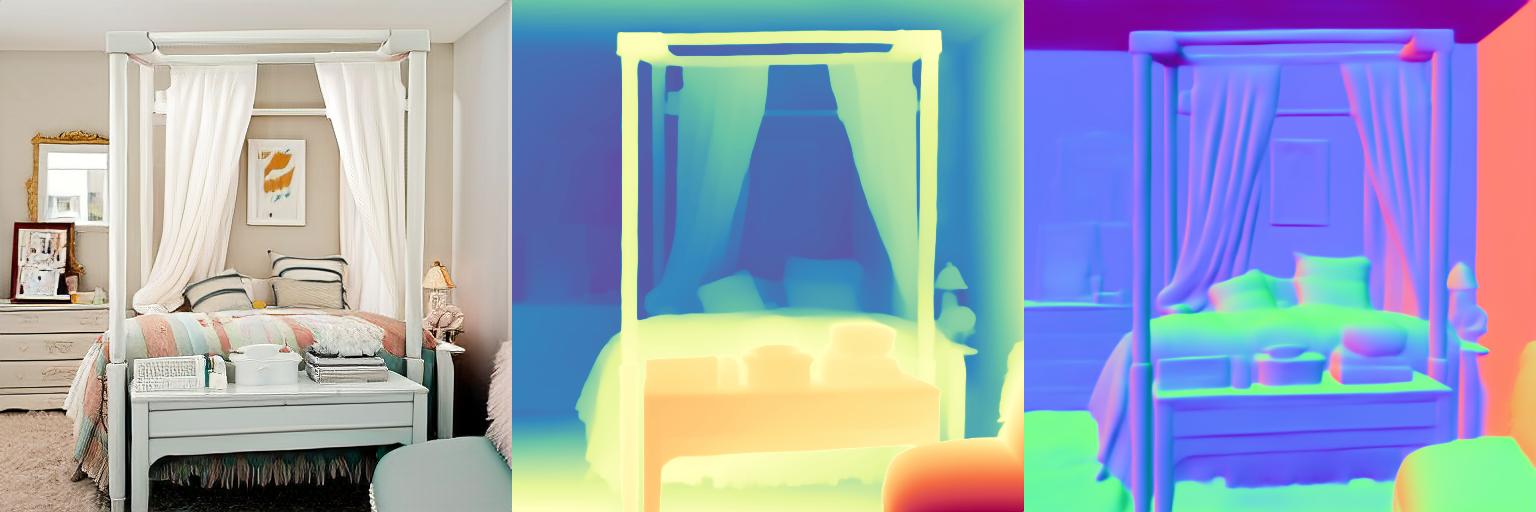

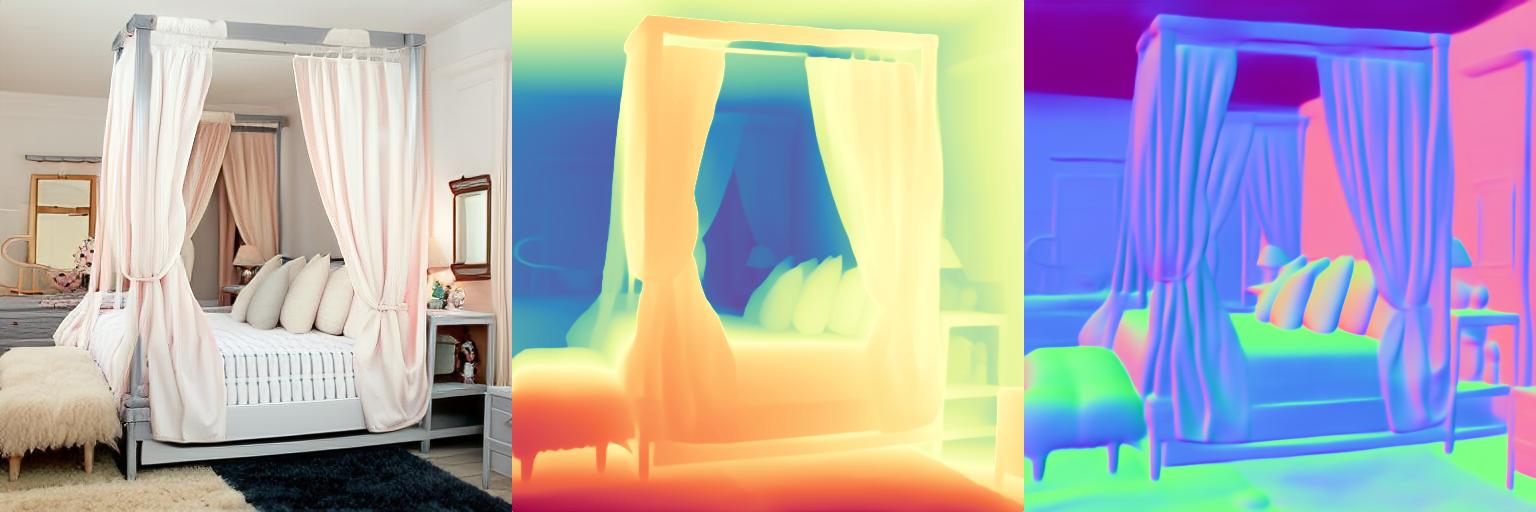

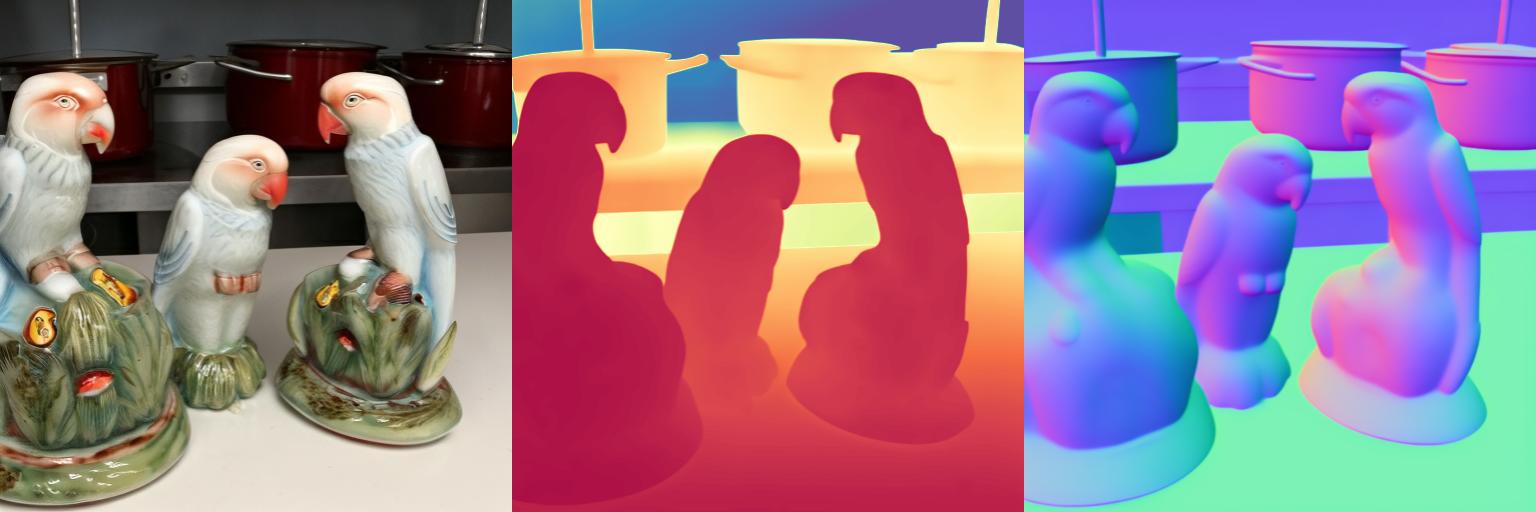

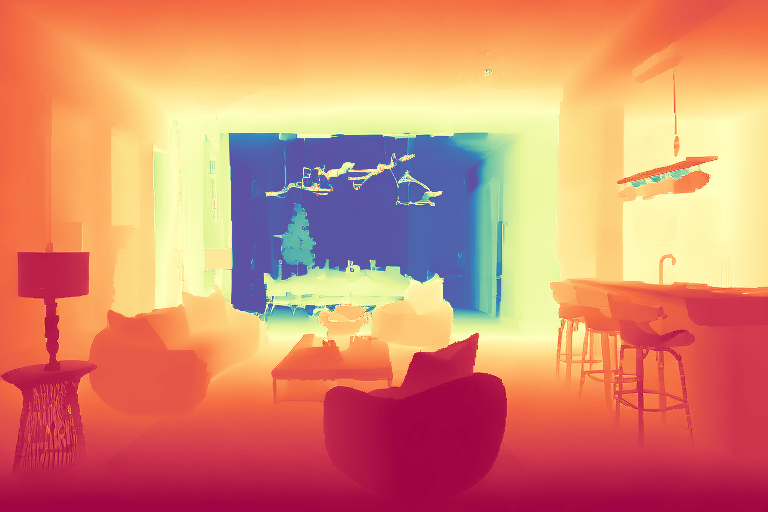

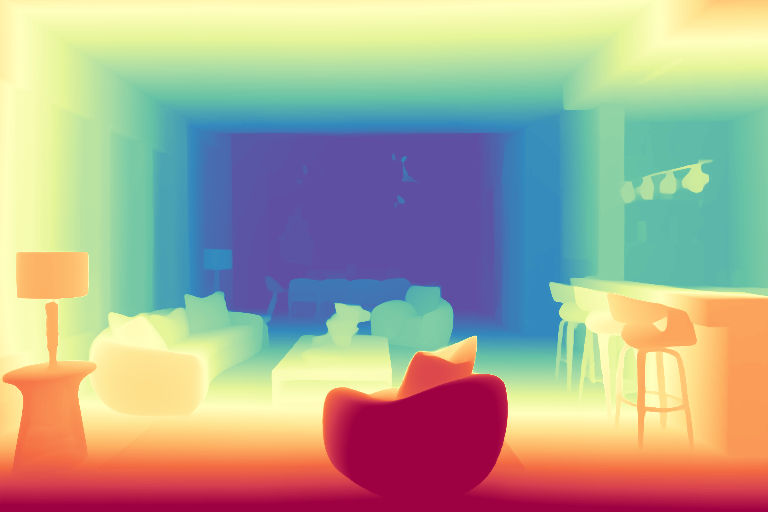

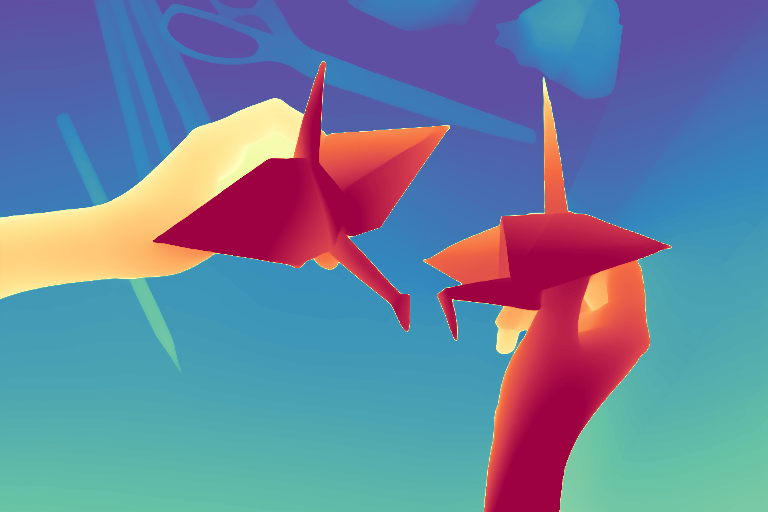

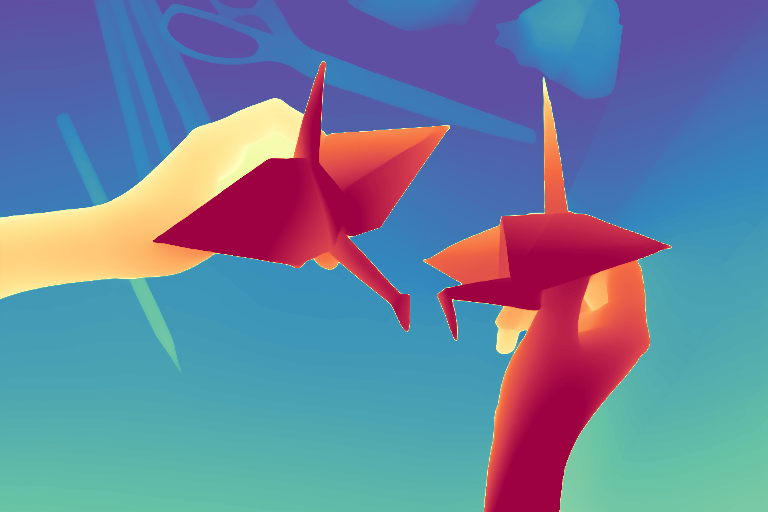

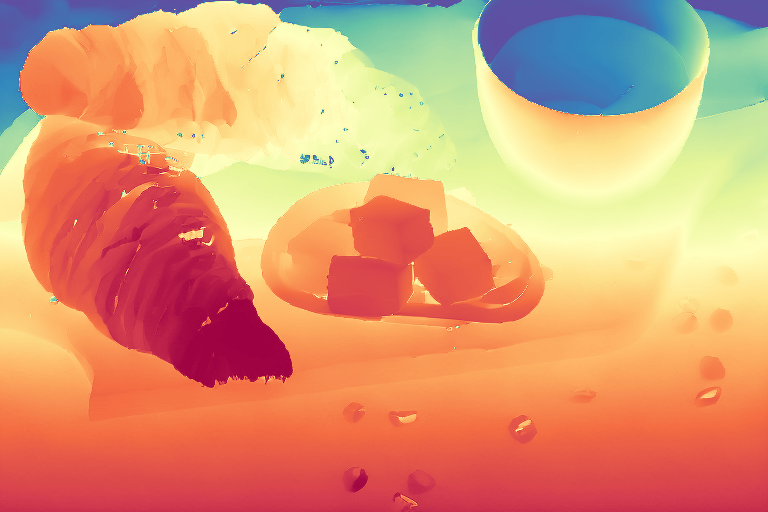

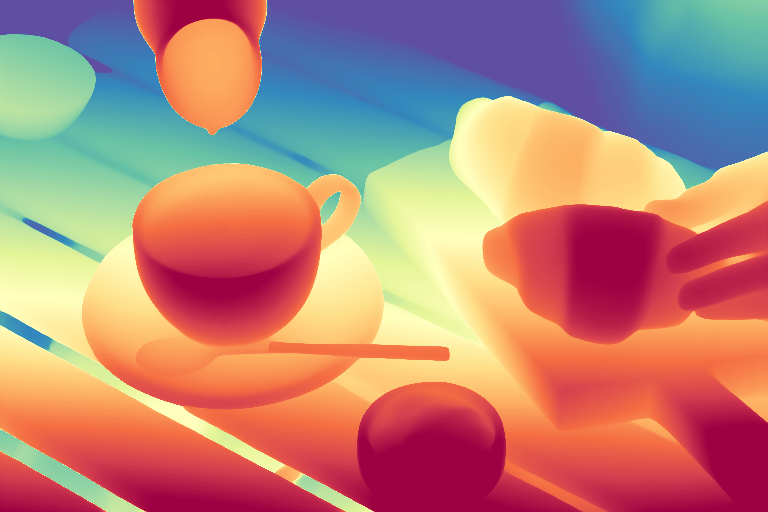

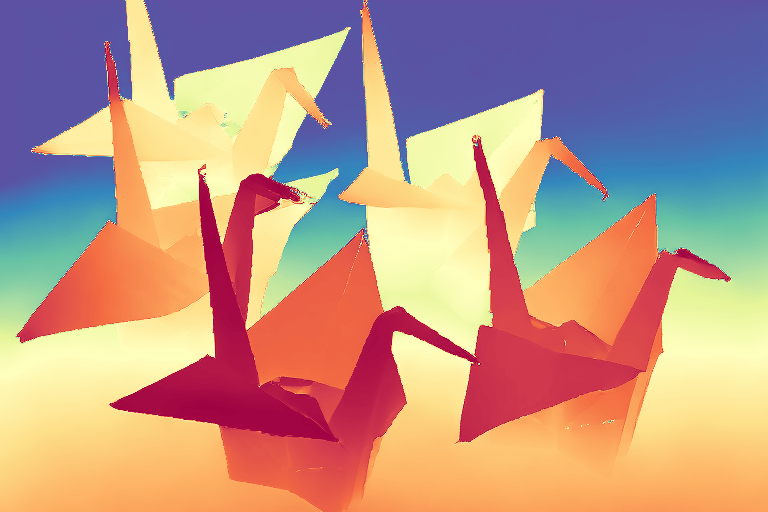

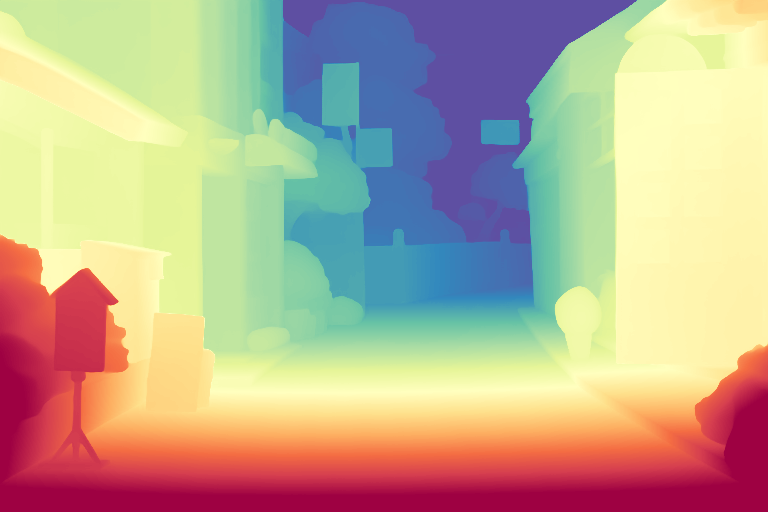

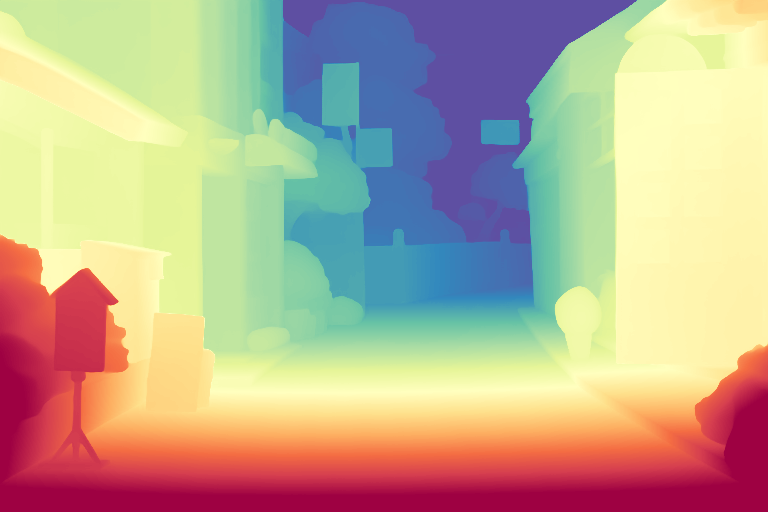

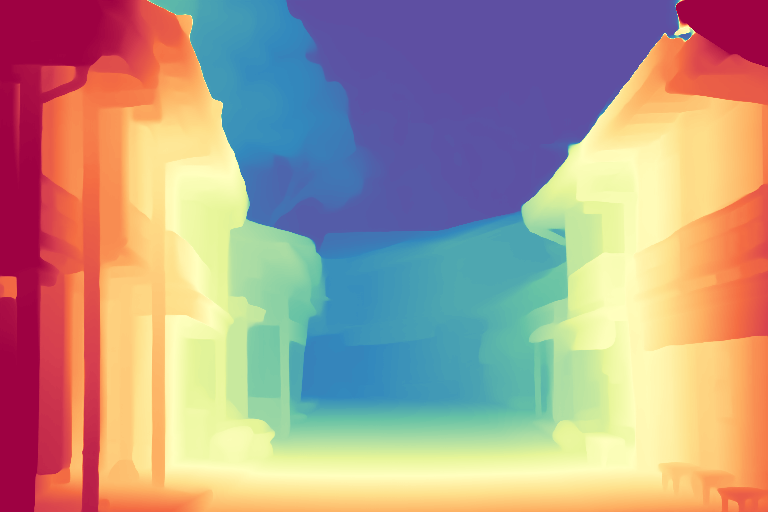

Orchid can also jointly generate depth and surface normals from input images with additional image-conditioned finetuning. We visualize below the joint depth and surface normal predictions for input color images, and compare our results to previous work on joint depth-normal prediction using diffusion models: GeoWizard.

We first train a new VAE to jointly encode color, depth and normal to a latent space. We then finetune a pretrained latent diffusion model for text-conditioned denoising on the joint latent space. We use a distillation loss during the VAE training to ensure that the joint latent space is structurally similar to the color-only latent used for pretraining.

The trained Orchid model can be readily used to generate color, depth and normals from text (a). For image-conditioned depth and normal prediction (b), we further finetune Orchid with an additional color-only latent input, while diffusing the same joint latent.

@misc{krishnan2025orchid,

title={Orchid: Image Latent Diffusion for Joint Appearance and Geometry Generation},

author={Akshay Krishnan and Xinchen Yan and Vincent Casser and Abhijit Kundu},

year={2025},

eprint={2501.13087},

archivePrefix={arXiv},

primaryClass={cs.CV}

}